September 28, 2020

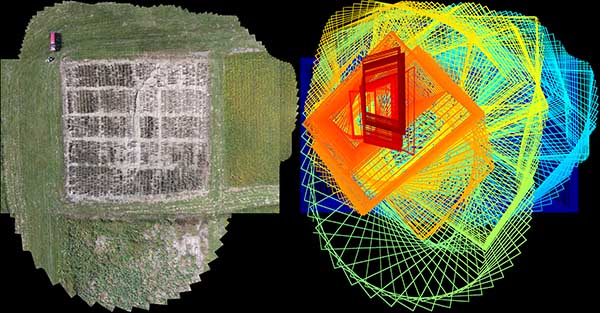

This photo illustration shows how a drone captures aerial video of a farm. A Mizzou research team is devising a way to pull high-resolution images from that video footage automatically.

Imagine being able to assess the health of a single plant in the middle of a field by automatically analyzing a photo of it. That is possible today using aerial video footage captured by a drone, but the process is not practical. Now, a Mizzou Engineering team is devising a more efficient way to create high-resolution panoramic-style images that can be used to make timely decisions on the farm.

“You can get a single photo over a large area by flying higher. But you lose resolution. You will not see the fine detail of plant structures,” said Rumana Aktar, a PhD student in Computer Science. “But analyzing images from a long video sequence is time-consuming. It’s difficult to find what you are looking for, such as a particular location or particular date and time. For this process, we take individual frames from the video, which captures the fine details, and we stitch them together. That produces one huge image while preserving the details.”

A Game-Changing Approach

It’s called Video Mosaicking and SummariZation, or VMZ. Aktar and her PhD advisor, Electrical Engineering and Computer Science Professor Kannappan Palaniappan, developed the video analytics algorithm as part of a larger Department of Defense-Army Research Lab sponsored research project.

What’s novel about the work is that researchers are developing the mosaicking and georegistration process in real-world settings. Using drones available to the public, they’re capturing video over a maize field at MU South Farm. There, EECS Associate Professor Toni Kazic manages about an acre of corn.

The technology could be a game-changer for farmers who currently rely on manual or expensive processes to monitor fields.

“Right now, I have to walk through the field and look at every single plant. And that’s a lot of work,” Kazic said. “To use an image for management problems such as bugs, drought or other stressors, we really need a lot of detail. We can’t capture that from high altitudes without sophisticated, expensive equipment.”

Navigating Challenges

The VMZ mosaicking method is still being perfected. Even then, it won’t be something everyday people can do because of high computational costs. To create a panoramic photo, VMZ can take several hours to piece together high-resolution images captured at around 1 cm ground sampling distance (GSD). Add in factors like camera calibration, lens correction and moving objects, and the process becomes even more complex.
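For a sense of what a 1 cm GSD implies, the short Python sketch below applies the standard pinhole-camera approximation, in which GSD equals sensor width times altitude divided by focal length times image width in pixels. The camera values in the example are hypothetical and are not taken from the team's drones; the function name is likewise an illustration only.

```python
def ground_sampling_distance_m(sensor_width_mm, focal_length_mm,
                               altitude_m, image_width_px):
    """Approximate ground distance (in meters) covered by one pixel.

    Assumes a camera pointing straight down over flat ground.
    The millimeter units cancel, leaving meters per pixel.
    """
    return (sensor_width_mm * altitude_m) / (focal_length_mm * image_width_px)


# Hypothetical camera: 13.2 mm wide sensor, 8.8 mm lens, 5472 px wide image.
gsd_m = ground_sampling_distance_m(13.2, 8.8, altitude_m=36.0, image_width_px=5472)
gsd_cm = gsd_m * 100.0

# Width of ground covered by a single frame at this altitude.
footprint_width_m = gsd_m * 5472

print(f"GSD: {gsd_cm:.2f} cm/pixel, frame width on ground: {footprint_width_m:.1f} m")
```

Under these assumed values, flying at about 36 m gives roughly 1 cm per pixel. Doubling the altitude doubles the GSD, which is the resolution trade-off described earlier in the article.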

To make the final product more accessible, the team isn’t relying on GPS navigation telemetry from the drone. Instead, Aktar uses computer vision techniques to identify salient features in images that persist across time. That way, two images can be matched and aligned in order to overlay or stitch the two images together.
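The general idea of matching features and aligning two frames can be sketched in a few lines of Python. This is a simplified illustration, not the VMZ algorithm: the descriptors and coordinates are made-up toy data, the function names are hypothetical, and the alignment is reduced to a pure shift, whereas practical mosaicking systems usually estimate a richer transform such as a homography.

```python
from statistics import median


def squared_distance(a, b):
    """Squared Euclidean distance between two descriptor vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def match_features(desc_a, desc_b, ratio=0.75):
    """Pair each descriptor in frame A with its nearest neighbor in frame B.

    A match is kept only if the best candidate is clearly better than the
    second best (a ratio test), which discards ambiguous features.
    """
    matches = []
    for i, d in enumerate(desc_a):
        ranked = sorted(range(len(desc_b)),
                        key=lambda j: squared_distance(d, desc_b[j]))
        if len(ranked) < 2:
            continue
        best, second = ranked[0], ranked[1]
        # Distances are squared, so the ratio is squared as well.
        if squared_distance(d, desc_b[best]) < ratio ** 2 * squared_distance(d, desc_b[second]):
            matches.append((i, best))
    return matches


def estimate_translation(points_a, points_b, matches):
    """Estimate the (dx, dy) shift from frame A to frame B.

    The median makes the estimate tolerant of a few wrong matches.
    """
    dx = median(points_b[j][0] - points_a[i][0] for i, j in matches)
    dy = median(points_b[j][1] - points_a[i][1] for i, j in matches)
    return dx, dy


# Toy data: frame B shows the same four features shifted by (-12, +5),
# listed in a different order and with slightly noisy descriptors.
points_a = [(10, 10), (50, 20), (30, 80), (70, 60)]
desc_a = [(1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.0, 0.0, 1.0), (1.0, 1.0, 1.0)]
points_b = [(38, 25), (-2, 15), (58, 65), (18, 85)]
desc_b = [(0.0, 0.9, 0.1), (0.9, 0.0, 0.0), (1.0, 1.0, 0.9), (0.1, 0.0, 1.0)]

matches = match_features(desc_a, desc_b)
shift = estimate_translation(points_a, points_b, matches)
print(matches, shift)
```

Once the shift between consecutive frames is known, each new frame can be pasted onto a shared canvas at its accumulated offset, which is the essence of building a mosaic without GPS data.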

Aktar has developed the VMZ algorithm to do this image matching and stitching automatically. Currently, VMZ outperforms both traditional and deep learning methods. However, it is computationally expensive, and many challenging factors remain. Adapting to large structures in the scene with depth requires correcting for motion parallax. VMZ also has to adapt the mosaicking process when the drone changes course or the camera rapidly changes the direction it is pointing. Even a gust of wind could throw things off.

“Think about the panoramic mode on your smartphone,” Palaniappan said. “You have to hold your hand steady over the full video clip, or else it’s ruined. That tells you how sensitive the current algorithms are to disturbances. Rumana’s VMZ algorithm is much more robust and can easily adapt to small disruptions.”

From the Lab to the Cloud

Imagery from the VMZ system. At left is the actual mosaic output from VMZ. The right half shows the footprint of individual images on the mosaic canvas. In the footprint image, the color changes from blue to green to yellow to red as a function of time in the video sequence to increase visual clarity.
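The time-based coloring described in the caption can be approximated with a simple piecewise-linear color ramp. The Python sketch below is an assumption about how such a mapping might be built, not the team's actual visualization code, and the function name is hypothetical.

```python
def time_to_rgb(t):
    """Map normalized time t in [0, 1] to an (r, g, b) tuple.

    The ramp passes through blue, green, yellow and red, interpolating
    linearly between neighboring color stops.
    """
    stops = [
        (0.0, 0.0, 1.0),  # blue: start of the video
        (0.0, 1.0, 0.0),  # green
        (1.0, 1.0, 0.0),  # yellow
        (1.0, 0.0, 0.0),  # red: end of the video
    ]
    t = min(max(t, 0.0), 1.0)                  # clamp out-of-range input
    scaled = t * (len(stops) - 1)
    idx = min(int(scaled), len(stops) - 2)     # segment containing t
    frac = scaled - idx                        # position inside that segment
    lo, hi = stops[idx], stops[idx + 1]
    return tuple(a + (b - a) * frac for a, b in zip(lo, hi))


# Color for each frame footprint in a hypothetical 5-frame sequence.
num_frames = 5
colors = [time_to_rgb(i / (num_frames - 1)) for i in range(num_frames)]
for i, color in enumerate(colors):
    print(i, color)
```

Drawing each frame's outline in its assigned color makes the flight path readable at a glance, since early frames appear blue and late frames appear red.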

The team hopes to eventually offer the technology to farmers through a cloud-based service. Someone could submit video footage from a drone and ask the team to create a mosaic image, analyzing it for specific crop health parameters.

A cloud-based software service would allow farmers to identify and manage problems quickly and affordably. For instance, if a specific section of a field were experiencing nitrogen stress, you could fertilize the impacted area without having to fertilize an entire field. Or, you could pinpoint areas where plants need extra attention, such as pockets of insect damage or drought.

“There are lots of people like me around the world working hard to improve crops to make them more resilient, bountiful and nutritious in the face of climate change,” Kazic said. “All of these people need to be able to look at what’s going on in the field, on the farm or in the orchard more easily and cheaply.”

The technology holds value for Mizzou, too. Researchers from bioengineering, plant sciences, agriculture, wildlife ecology and more are collecting drone footage they can’t keep up with, Palaniappan said.

“There are at least a half a dozen labs on campus collecting drone data with an agricultural focus,” he said. “All of these groups have come to us saying ‘we’ve collected terabytes of information every season for years, and it’s sitting on hard drives since we can’t process fast enough.’ To process one collection can take one to two weeks, and they’ve been collecting drone video every day for a whole season. That’s the volume of big video data we’re talking about.”

Published Findings

The research team recently described their work in the journal Applications in Plant Sciences. “Robust mosaicking of maize fields from aerial imagery” appears in a special issue, Advances in Plant Phenomics: From Data and Algorithms to Biological Insights, published in September.

Co-authors include:

- Dewi Endah Kharismawati, Master’s and PhD student in Computer Science

- Hadi AliAkbarpour, Assistant Research Professor in EECS

- Filiz Bunyak, Assistant Research Professor in EECS

- Ann E. Stapleton, Assistant Professor of Biology and Marine Biology, University of North Carolina
